Single file system to LVM using rsync

Rsync from single filesystem to LVM on Ubuntu

(in this case on an EC2 instance)

NOTE: This needs another round of testing to ensure it’s accurate and flows. If you’re up for giving it a test, please post a comment and let me know how it goes or if you ran into any issues! I’ll remove this when I know things are working well.

I’ve been playing around with Amazon EC2 instances for a number of years, even running Plex on one before Amazon did away with their unlimited cloud drive pricing. Streams were sometimes slow to start with that configuration because of the delay in getting data to Plex, but it was a pretty cool setup.

One of the issues with EC2 instances out-of-the-box is a single monolithic filesystem. This is probably fine for more ephemeral servers, but it can be problematic for longer-lived ones, especially since you don’t have console access with EC2. It’s just safer to give applications like Apache and the database their own filesystems separate from /, and to do the same for /tmp, /var (mostly for logfiles), and of course /boot. I tend to add in /home, and sometimes /usr.

One of the powers of LVM is you can easily add or expand the underlying physical volume to add space to the volume group, which can then be allocated to the individual logical volumes to grow them as needed. I haven’t found a way to boot a Linux server without at least one drive with a partition to accommodate the booting process, so this stifles one of the powers of LVM on virtualization, which is being able to hot-expand the drive in the hypervisor and then do the same within the server to immediately have more space available to allocate to filesystems. If I get around to needing to expand my EBS and dealing with the partition, I’ll do another write-up of how to do that without moving to a new EBS.

Assumptions

I may come back and do write-ups on how to spin up an EC2 instance, deal with snapshots, creating new AMIs, and various clean-ups in AWS, as well as how to set up and manage LVM, but for now I’m going to assume you can figure this out as needed.

It’s worth pointing out that this works best with data that’s more static, versus a server that’s highly utilized. In my case, I was able to make the transition without any downtime, but that’s because my site wasn’t heavily used and I was able to spin up a new server to take the place of the old one, and the switch-over was redirecting my elastic IP to point at the new server. If your server is under load with significant filesystem changes (like with a database), you will need to schedule downtime so you can shut everything down, do the final copy, and retire the old server. If it’s just a webserver with nothing changing except log files, you can do this change without any downtime.

Process

To test, I spun up a number of EC2 spot instances created from snapshots. If you have a production machine you want to do this on, I recommend using EC2’s ability to create an image from a running instance to create a new server to work on. If all goes well, the server you spin up will be the one that goes live.

1. Create an EC2 instance to work with, best if it’s the server you want to migrate since then all the data will be there.

2. Attach a new EBS that’s large enough to accommodate the entire filesystem of the server, plus any spare capacity you might want down the line. Since you pay per-GB for EBS, don’t go too crazy (I wish you only paid for what you used, because then I’d recommend making the volume large so you don’t have to deal with the partition table later).

Ingest this new volume into the system, partition with a small boot partition (I went with 15M, but it probably could be smaller), and create the volume group for it (I’m leaving LVM management to another post, although the below are the commands you’ll need).

#pvcreate

#vgcreate
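As a minimal sketch of how those two commands might be filled in: the disk name /dev/nvme1n1 is borrowed from step 9, while the partition name /dev/nvme1n1p2 is purely an assumption (partition 1 being the small boot partition), so check yours with lsblk first. vgweb01 is the volume group name used in the steps that follow. The hypothetical run helper only prints each command unless APPLY=1 is set.

```shell
#!/bin/sh
# Sketch only: prints the LVM setup commands unless APPLY=1 is set.
set -eu

DISK="${DISK:-/dev/nvme1n1}"        # assumption: the newly attached EBS volume
LVM_PART="${LVM_PART:-${DISK}p2}"   # assumption: partition 2 is the one handed to LVM
VG="${VG:-vgweb01}"                 # volume group name used in the later steps

# Hypothetical helper: print the command in dry-run mode, execute it when APPLY=1.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

create_vg() {
    run pvcreate "$LVM_PART"        # initialise the partition as a physical volume
    run vgcreate "$VG" "$LVM_PART"  # create the volume group on top of it
}

create_vg
```

Run it once as-is to read the plan, then again with APPLY=1 as root once the device names match your system.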

3. Create the logical volumes and format the filesystems. Here’s what I ran:

lvcreate -L 1G -n lvswap vgweb01

lvcreate -L 1G -n lvtmp vgweb01

lvcreate -L 1G -n lvhome vgweb01

lvcreate -L 3G -n lvroot vgweb01

lvcreate -L 2G -n lvvar vgweb01

lvcreate -L 4G -n lvwww01 vgweb01

lvcreate -L 2G -n lvmysql01 vgweb01

lvcreate -L .5G -n lvboot vgweb01

mkfs.ext4 /dev/mapper/vgweb01-lvhome

mkfs.ext4 /dev/mapper/vgweb01-lvmysql01

mkfs.ext4 /dev/mapper/vgweb01-lvroot

mkfs.ext4 /dev/mapper/vgweb01-lvboot

mkfs.ext4 /dev/mapper/vgweb01-lvtmp

mkfs.ext4 /dev/mapper/vgweb01-lvvar

mkfs.ext4 /dev/mapper/vgweb01-lvwww01

mkswap /dev/vgweb01/lvswap
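If typing sixteen near-identical commands feels error-prone, the same work can be driven from a small table. This is only a sketch: the names and sizes are copied from the commands above, but the table layout, the lv_table and create_lvs functions, and the dry-run run helper are illustrative inventions. Nothing is executed unless APPLY=1 is set.

```shell
#!/bin/sh
# Sketch only: table-driven version of the lvcreate/mkfs/mkswap commands.
set -eu

VG="${VG:-vgweb01}"

# Hypothetical helper: print in dry-run mode, execute when APPLY=1.
# stdin is redirected so a prompting tool cannot swallow the table below.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@" </dev/null
    else
        echo "+ $*"
    fi
}

# Columns: logical volume name, size, filesystem type.
lv_table() {
    cat <<'EOF'
lvswap 1G swap
lvtmp 1G ext4
lvhome 1G ext4
lvroot 3G ext4
lvvar 2G ext4
lvwww01 4G ext4
lvmysql01 2G ext4
lvboot .5G ext4
EOF
}

create_lvs() {
    lv_table | while read -r name size fstype; do
        run lvcreate -L "$size" -n "$name" "$VG"
        if [ "$fstype" = "swap" ]; then
            run mkswap "/dev/$VG/$name"
        else
            run "mkfs.$fstype" "/dev/mapper/$VG-$name"
        fi
    done
}

create_lvs
```

Keeping the sizes in one place also makes it easier to compare the total against the size of the EBS volume before anything is created.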

4. Add everything to fstab, which I did with echo commands to make the copy/paste easier. NOTE: I recommend significantly more caution if you’re doing this on a server that’s important and not a copy of an already running server!

echo "/dev/mapper/vgweb01-lvroot /newroot ext4 discard,defaults 0 1" >>/etc/fstab

echo "/dev/mapper/vgweb01-lvboot /newroot/boot ext4 discard,defaults 0 2" >>/etc/fstab

echo "/dev/mapper/vgweb01-lvhome /newroot/home ext4 discard,defaults 0 2" >>/etc/fstab

echo "/dev/mapper/vgweb01-lvvar /newroot/var ext4 discard,defaults 0 2" >>/etc/fstab

echo "/dev/mapper/vgweb01-lvtmp /newroot/tmp ext4 discard,defaults 0 2" >>/etc/fstab

echo "/dev/mapper/vgweb01-lvmysql01 /newroot/var/lib/mysql ext4 discard,defaults 0 2" >>/etc/fstab

echo "/dev/mapper/vgweb01-lvwww01 /newroot/var/www ext4 discard,defaults 0 2" >>/etc/fstab
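For the more cautious route, one option is to generate the entries to the terminal first and only append them once they look right. The sketch below prints the same seven lines as the echo commands; the fstab_entries function and the NEWROOT variable are illustrative names, not part of any tool.

```shell
#!/bin/sh
# Sketch only: print the fstab entries for review instead of appending blindly.
set -eu

VG="${VG:-vgweb01}"
NEWROOT="${NEWROOT:-/newroot}"

fstab_entries() {
    while read -r lv mnt; do
        # fsck pass 1 for the root filesystem, 2 for everything else
        if [ "$mnt" = "/" ]; then pass=1; else pass=2; fi
        # ${mnt%/} turns "/" into "" so the root entry is just $NEWROOT
        echo "/dev/mapper/$VG-$lv ${NEWROOT}${mnt%/} ext4 discard,defaults 0 $pass"
    done <<'EOF'
lvroot /
lvboot /boot
lvhome /home
lvvar /var
lvtmp /tmp
lvmysql01 /var/lib/mysql
lvwww01 /var/www
EOF
}

fstab_entries
```

Once the output looks right, something like `cp -p /etc/fstab /etc/fstab.bak` followed by `fstab_entries >> /etc/fstab` applies it while leaving a copy to fall back on.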

5. Create the mount points and mount the filesystems in the following order. The order matters because some filesystems are nested inside others (/var/lib/mysql lives on the /var filesystem), so a parent has to be mounted before the directories inside it are created:

mkdir /newroot

mount /newroot

mkdir /newroot/var

mount /newroot/var

mkdir /newroot/boot

mount /newroot/boot

mkdir /newroot/var/www

mkdir /newroot/var/lib

mkdir /newroot/var/lib/mysql

mkdir /newroot/tmp

mkdir /newroot/home
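The ordering rule can also be left to sort: in the C locale a parent path always sorts ahead of anything nested inside it. The following is a sketch under that assumption, with the mount points listed deliberately out of order. The mount_points and mount_in_order functions and the run helper are made up for illustration, and `mount <dir>` relies on the fstab entries from step 4. It only prints commands unless APPLY=1 is set.

```shell
#!/bin/sh
# Sketch only: create and mount nested mount points parent-first.
set -eu

NEWROOT="${NEWROOT:-/newroot}"

# Hypothetical helper: print in dry-run mode, execute when APPLY=1.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@" </dev/null
    else
        echo "+ $*"
    fi
}

# Listed in no particular order on purpose.
mount_points() {
    cat <<EOF
$NEWROOT/var/lib/mysql
$NEWROOT/home
$NEWROOT
$NEWROOT/var/www
$NEWROOT/boot
$NEWROOT/var
$NEWROOT/tmp
EOF
}

mount_in_order() {
    # Byte-wise sorting puts /newroot/var ahead of /newroot/var/lib/mysql.
    mount_points | LC_ALL=C sort | while read -r mnt; do
        run mkdir -p "$mnt"   # the directory is created on the already-mounted parent
        run mount "$mnt"      # device and options come from /etc/fstab
    done
}

mount_in_order
```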

6. Mount everything else, and then check to see if everything looks good and/or deal with any errors that came up:

mount -a

df -h
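Reading df -h by eye works, but a forgotten mount is easy to miss, and rsync would then quietly copy that data onto the parent filesystem instead. Here is a small sketch of a scripted check, assuming the mountpoint utility from util-linux is installed; check_mounts is a hypothetical function name.

```shell
#!/bin/sh
# Sketch only: report any expected mount point that is not actually mounted.
set -eu

NEWROOT="${NEWROOT:-/newroot}"

check_mounts() {
    root="$1"
    missing=0
    # "" stands for the new root itself
    for sub in "" /boot /home /tmp /var /var/lib/mysql /var/www; do
        if ! mountpoint -q "$root$sub" 2>/dev/null; then
            echo "not mounted: $root$sub"
            missing=$((missing + 1))
        fi
    done
    # Succeed only when nothing was missing.
    [ "$missing" -eq 0 ]
}

check_mounts "$NEWROOT" || echo "fix the entries listed above before running rsync"
```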

7. rsync worked best for the actual migration, which has the added advantage of being able to work from a remote server in case you need to get the latest-and-greatest from the server you’re replacing just ahead of a cutover. rsync only transfers what has changed since the last run, so you can run the below command as many times as you need to. Since I’m doing this on a copy of the server to convert, I can reference / as the source and know the -x parameter keeps the transfer on that one filesystem, without attempting to copy anything on the /newroot filesystems.

NOTE: If you have multiple filesystems on the original server, this command will need to be run for each filesystem, to move those files to the new structure.

rsync -axHAWXS --numeric-ids --info=progress2 / /newroot/
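For the multiple-filesystem case, the same command can be wrapped in a loop. This is a sketch only: sync_fs, SOURCE_FILESYSTEMS and the run helper are illustrative names, and the default of just / matches the single-filesystem case described here. The flags are the ones from the command above: -a archive mode, -x stay on one filesystem, -H hard links, -A ACLs, -W whole files, -X extended attributes, -S sparse files.

```shell
#!/bin/sh
# Sketch only: run the same rsync once per source filesystem.
set -eu

NEWROOT="${NEWROOT:-/newroot}"

# Hypothetical helper: print in dry-run mode, execute when APPLY=1.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

# Copy one source filesystem into the matching place under $NEWROOT.
sync_fs() {
    src="${1%/}/"            # "/" stays "/", "/data" becomes "/data/"
    dest="$NEWROOT$src"      # "/newroot/" or "/newroot/data/"
    run rsync -axHAWXS --numeric-ids --info=progress2 "$src" "$dest"
}

# Space-separated list of source mount points; only / by default.
for fs in ${SOURCE_FILESYSTEMS:-/}; do
    sync_fs "$fs"
done
```

The trailing slash on the source matters: it tells rsync to copy the contents of the directory rather than the directory itself.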

8. Chroot into the new filesystem to set up grub so we can actually boot, which starts with mounting some things from the main OS.

for i in /sys /proc /run /dev; do sudo mount --bind "$i" "/newroot$i"; done

chroot /newroot

9. Update grub. In my case the root volume was on /dev/nvme1n1, although it may be /dev/xvdX, or the more traditional /dev/sdX. I had a number of issues with this step, so I’ve tossed a few extra commands in there to ensure a few of the bases are covered when it comes to booting.

update-grub

grub-install /dev/nvme1n1

grub-install --recheck /dev/nvme1n1

update-initramfs -u

update-grub

10. fstab will need a clean-up, which is mainly going to involve removing /newroot from each entry. It’s also worth using blkid to find the UUID of the boot partition, and updating the /boot line to reflect the UUID. It will look something like the following, but with your local UUID.

#UUID=7e5c217f-34b9-4043-9842-f737c78498d4 /boot [....]
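The /newroot clean-up is mechanical enough to hand to sed. The sketch below prints a cleaned copy rather than editing in place, so the result can be reviewed first. It assumes the entries look like the ones added in step 4 (a /dev/mapper device followed by whitespace and the mount point), and clean_fstab is a hypothetical function name.

```shell
#!/bin/sh
# Sketch only: print a copy of an fstab with the /newroot prefix stripped.
set -eu

FSTAB="${FSTAB:-/etc/fstab}"   # inside the chroot this is the new system's fstab

clean_fstab() {
    # First expression: /newroot/boot -> /boot (and the other nested paths).
    # Second expression: a bare /newroot -> /.
    sed \
        -e 's#^\(/dev/mapper/[^[:space:]]*[[:space:]]\{1,\}\)/newroot/#\1/#' \
        -e 's#^\(/dev/mapper/[^[:space:]]*[[:space:]]\{1,\}\)/newroot\([[:space:]]\)#\1/\2#' \
        "$1"
}

if [ -r "$FSTAB" ]; then
    clean_fstab "$FSTAB"
fi
```

Lines that don’t start with /dev/mapper, such as the old root entry, pass through untouched and still need a manual look. For the UUID, `blkid -s UUID -o value` followed by the device that holds /boot prints just the value to paste in.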

EC2 Shuffling

At this point your work on the new filesystem is complete, and the next step is to use the EBS volume to create a new EC2 image. This is a little complicated, because you can’t directly make an AMI from an EBS volume, and you can’t mount an EBS volume as the root of an instance.

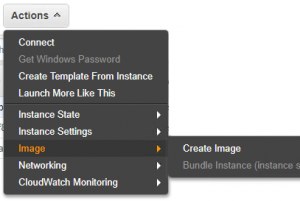

To accomplish this mounting, you need to take a snapshot of the EBS volume, and then create a new AMI from that snapshot. Once that’s done, you can launch a new EC2 instance using the AMI, validate that everything is functional, and clean up the snapshots and AMIs you used since they’re very specific to your work to move the filesystems around.
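If you’d rather script the shuffle than click through the console, the AWS CLI has equivalents for both steps. Treat this as a rough sketch: the volume ID, snapshot ID and AMI name are placeholders, and the architecture, the --ena-support flag and the /dev/sda1 root device name are assumptions that need to match your instance type. The run helper only prints the commands unless APPLY=1 is set.

```shell
#!/bin/sh
# Sketch only: snapshot the EBS volume, then register an AMI from the snapshot.
set -eu

VOLUME_ID="${VOLUME_ID:-vol-0123456789abcdef0}"       # placeholder
SNAPSHOT_ID="${SNAPSHOT_ID:-snap-0123456789abcdef0}"  # placeholder: ID printed by the first step
AMI_NAME="${AMI_NAME:-web01-lvm}"                     # placeholder
ROOT_DEVICE="${ROOT_DEVICE:-/dev/sda1}"               # assumption

# Hypothetical helper: print in dry-run mode, execute when APPLY=1.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

snapshot_volume() {
    # Prints the new snapshot ID when actually executed.
    run aws ec2 create-snapshot --volume-id "$VOLUME_ID" \
        --description "LVM root volume" \
        --query SnapshotId --output text
}

register_ami() {
    # Block until the snapshot is complete, then build the AMI on top of it.
    run aws ec2 wait snapshot-completed --snapshot-ids "$SNAPSHOT_ID"
    run aws ec2 register-image --name "$AMI_NAME" \
        --architecture x86_64 --virtualization-type hvm --ena-support \
        --root-device-name "$ROOT_DEVICE" \
        --block-device-mappings "DeviceName=$ROOT_DEVICE,Ebs={SnapshotId=$SNAPSHOT_ID}"
}

snapshot_volume
register_ami
```

In a real run the two steps happen separately: take the snapshot, note the ID it prints, and pass that in as SNAPSHOT_ID for the second step.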

If you have any issues with booting, EC2 has a Get System Log that populates after a few minutes that will give you some hints about what might have gone wrong (in my case I forgot to add /boot to fstab, so the server kept dropping to the CTRL-D emergency maintenance prompt).

Remember that if anything goes wrong, shut down your instance and go fix it on the server you were using to do the rsyncs. Since you can leave the EBS volume attached, the extra work is just in the snapshot/AMI/new instance steps of testing.

Good Luck!

P.S. If you want to go pro on this, you can have two EBS volumes: one that acts as the boot device, and a second that can be used in LVM without partitioning. This gives you the ability to expand the drive in EC2, do a pvresize in the OS, and get more space. With a partition as described above, you probably don’t want to risk re-partitioning the drive live, so you’re stuck attaching another EBS volume and adding it to the volume group for the additional space.
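For that two-volume layout, the grow step might look something like the sketch below, run after the EBS volume has been enlarged in EC2. The device name /dev/nvme2n1, the choice of lvvar and the 1G increment are all placeholders, and the run helper only prints the commands unless APPLY=1 is set.

```shell
#!/bin/sh
# Sketch only: pick up a larger EBS volume and hand the space to one LV.
set -eu

DATA_DISK="${DATA_DISK:-/dev/nvme2n1}"  # placeholder: unpartitioned EBS volume used as a PV
VG="${VG:-vgweb01}"
LV="${LV:-lvvar}"                       # placeholder: whichever volume needs the space
GROW_BY="${GROW_BY:-1G}"                # placeholder

# Hypothetical helper: print in dry-run mode, execute when APPLY=1.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

grow_lv() {
    run pvresize "$DATA_DISK"                      # make LVM notice the larger disk
    run lvextend -r -L "+$GROW_BY" "/dev/$VG/$LV"  # -r grows the filesystem along with the LV
}

grow_lv
```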